One branch of 20th-century art aimed, specifically, to resemble nothing. Representation and symbolism were abandoned in favour of formal perfection. In photographic media, where abstraction is more work than representation, reference was often undermined rather than destroyed. Photographic imagery was treated as if abstract: as if it were only a field of colour and texture, not a vista of objects. Many films were made in this vein, some of them by me, but space, narrative and symbolism would always sneak back in, because people love those things. This is good, but it leaves a question unanswered: what would a truly two-dimensional film look like?

To answer this, I believe, a robot must be created that combines a strong sense of vision with no concept of space. After many years studying computers, I have drawn up a feasible design. The robot described herein will look at the world as a shifting plane of colour and construct films out of what it sees, according to formal criteria of its own devising. It will work 24 hours a day and release finished works once or twice a week, which will be available over the internet and on video cassette. For some part of its life, it will be robot-in-residence at the Film Archive's new Mediaplex, and there it will show its work in progress to visitors.

From here on in, this document consists mainly of implementation details. The budget is towards the end.

This section is something of a glossary, describing some terms that might be used later.

Neural networks are like small simulations of brains. They are good at recognising patterns, but terrible at being certain about things. Neural networks learn through training and experience, but if the training data is complex or inconsistent, they might learn entirely the wrong things. The robot will contain several separate neural networks, each in charge of a different aspect of its behaviour.
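To make "good at patterns, bad at certainty" concrete, here is a minimal sketch of the smallest possible network: a single logistic neuron trained by gradient descent. It answers with a graded score between 0 and 1 rather than a yes or no. The toy features, labels and function names are hypothetical; the robot's real networks would be larger and would learn from video measurements.

```python
import math
import random

def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))

def train_neuron(samples, labels, epochs=2000, rate=0.5, seed=0):
    """Fit one logistic neuron with plain stochastic gradient descent."""
    rng = random.Random(seed)
    weights = [rng.uniform(-0.1, 0.1) for _ in range(len(samples[0]))]
    bias = 0.0
    for _ in range(epochs):
        for x, y in zip(samples, labels):
            p = sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)
            err = p - y  # gradient of the log-loss with respect to the neuron's input sum
            weights = [w - rate * err * xi for w, xi in zip(weights, x)]
            bias -= rate * err
    return weights, bias

def predict(weights, bias, x):
    return sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)

# Hypothetical features: (brightness, motion). Label 1 means "the id liked this shot".
samples = [(0.9, 0.1), (0.8, 0.2), (0.2, 0.9), (0.1, 0.8)]
labels = [1, 1, 0, 0]
w, b = train_neuron(samples, labels)
print(predict(w, b, (0.85, 0.15)))  # a high score, but a probability rather than a certainty
```

The output never reaches exactly 0 or 1, which is the sense in which such networks are never quite certain.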

Vector space searches will be used to compare all the different video snippets, quickly finding patterns and similarities. Colour field and motion analysis might reveal, say, 100 quantifiable ways in which shots differ. Each shot can then be treated as a point in a 100-dimensional vector space, where related images will be close together. As a video sequence progresses, it will carve a trajectory through this space. This trajectory could be likened to a narrative, and coercing shot sequences into desirable vector paths will be a means by which the film is directed.
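A minimal sketch of the idea, assuming each shot has already been reduced to a fixed-length feature vector (three dimensions here instead of 100; the shot names and values are hypothetical). Nearness is plain Euclidean distance, found by brute force, and a sequence's trajectory is simply the list of its shots' vectors.

```python
import math

def distance(a, b):
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def nearest(query, library, k=2):
    """Return the k shot names whose vectors lie closest to the query (brute force)."""
    return sorted(library, key=lambda name: distance(query, library[name]))[:k]

def trajectory_length(vectors):
    """Total distance travelled through feature space by a sequence of shots."""
    return sum(distance(a, b) for a, b in zip(vectors, vectors[1:]))

# Hypothetical features: (mean hue, mean brightness, amount of motion), each 0..1.
library = {
    "wet_asphalt": (0.60, 0.20, 0.10),
    "bus_window": (0.55, 0.30, 0.70),
    "sky": (0.58, 0.90, 0.05),
    "neon": (0.95, 0.60, 0.40),
}
print(nearest((0.60, 0.25, 0.20), library))  # ['wet_asphalt', 'bus_window']
sequence = [library[n] for n in ("sky", "wet_asphalt", "bus_window")]
print(trajectory_length(sequence))
```

With tens of hours of video, a real system would want an index rather than a full sort, but the notion of "close together" is the same.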

Multiple feedback loops. These are best avoided if you want a system to behave predictably. Conversely, they are a good way to make a system unpredictable. For the robot to truly be making its own work, I believe it is important for me to relinquish understanding of it as a complete system, hence the many feedback loops and neural networks.

Image analogies will allow the robot to transfer textures and colours between images. This is one of the techniques the robot will use to synthesize its own images from its experience. The robot thus gathers flawed and false memories, which are important in the creative process. These techniques will be applied very lightly so as to avoid hideous VJ effects.

Tree searches - in order to properly plan its films, the robot has to look some time ahead. It will use a principal variation tree search, which reduces the task of looking through millions of variations by examining only the best ones, whatever the robot thinks "best" is.
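Principal variation search comes from game playing, where it assumes an opponent. As a simpler stand-in (a swapped-in technique, not PVS itself), here is a depth-limited lookahead that only expands the few best-looking next shots at each step, which captures the "only examine the best ones" idea. The scoring function, brightness values and shot names are hypothetical.

```python
def plan(current, candidates, score, depth=3, width=2):
    """Return (total_score, path) for the best path of up to `depth` further shots.

    At each step only the `width` highest-scoring next shots are explored,
    so at most width**depth paths are visited instead of every ordering.
    """
    if depth == 0 or not candidates:
        return 0.0, []
    ranked = sorted(candidates, key=lambda nxt: score(current, nxt), reverse=True)
    best_total, best_path = float("-inf"), []
    for nxt in ranked[:width]:
        rest = [c for c in candidates if c != nxt]  # each shot used once per path
        sub_total, sub_path = plan(nxt, rest, score, depth - 1, width)
        total = score(current, nxt) + sub_total
        if total > best_total:
            best_total, best_path = total, [nxt] + sub_path
    return best_total, best_path

brightness = {"a": 0.1, "b": 0.3, "c": 0.5, "d": 0.9}

def gentle(cur, nxt):
    # Hypothetical taste: reward small steps in brightness between shots.
    return -abs(brightness[cur] - brightness[nxt])

total, path = plan("a", ["b", "c", "d"], gentle)
print(path)  # ['b', 'c', 'd']
```

Pruning to a fixed width can miss a path whose first step looks poor but pays off later; that is the price of not looking at everything.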

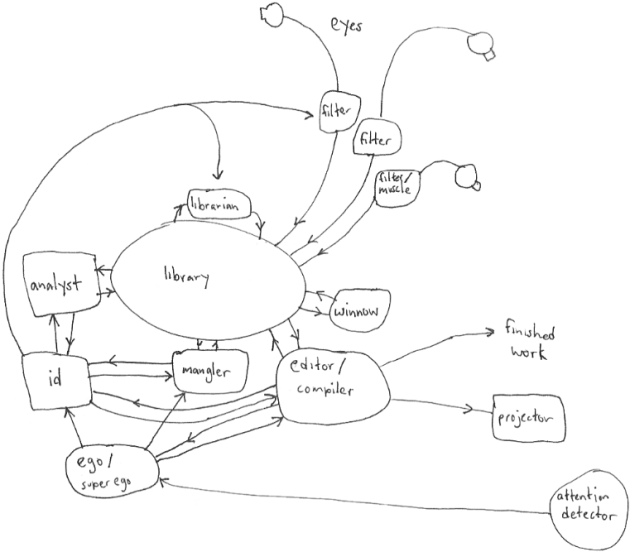

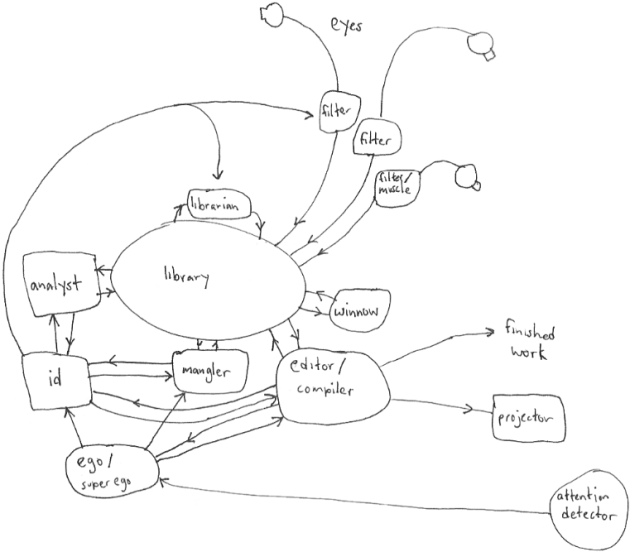

Evolutionary video pool. The robot's memory ("library" in the diagram) can be likened to a gene pool, where survival fitness means being in some way compelling to the robot. The act of dwelling on an image reproduces it in the library, while the least favoured images are periodically discarded. Over the long run, the robot's work will reveal these obsessions as recurring motifs corresponding to the fittest images in the memory pool.
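A minimal sketch of the pool, assuming each stored image is just an identifier and "dwelling" is an explicit call. Dwelling adds another copy, so an image's share of the pool acts as its fitness; recall favours well-copied images, and culling removes the least-copied ones. The class and method names are hypothetical.

```python
import random
from collections import Counter

class VideoPool:
    """The library as a gene pool: the number of copies of a clip is its fitness."""

    def __init__(self, clips):
        self.copies = Counter({clip: 1 for clip in clips})

    def dwell(self, clip):
        # Dwelling on an image reproduces it in the library.
        self.copies[clip] += 1

    def recall(self, rng):
        # Clips with more copies are proportionally more likely to be recalled.
        clips = list(self.copies)
        return rng.choices(clips, weights=[self.copies[c] for c in clips])[0]

    def cull(self, n):
        # Periodically discard the n least favoured clips entirely.
        for clip, _ in sorted(self.copies.items(), key=lambda kv: kv[1])[:n]:
            del self.copies[clip]

pool = VideoPool(["puddle", "kerb", "sky", "wall"])
for _ in range(3):
    pool.dwell("puddle")
pool.dwell("kerb")
pool.cull(2)
print(sorted(pool.copies))  # ['kerb', 'puddle']
```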

The eyes look at the world and perform some basic analysis. Some eyes have optic filters and others have muscles, for reasons explained below.

The librarian records the seen video, and catalogs it along with information about its properties. It maintains a database linking image files to analyses of their properties. Similarities and contrasts can thus be quickly detected.
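The robot is planned to use MySQL for this. As a self-contained stand-in, the sketch below uses Python's built-in `sqlite3` module instead; the table layout, column names and file paths are hypothetical. It shows one way to link video files to their measured properties so that similar clips can be found with a single query.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    """CREATE TABLE clips (
           id INTEGER PRIMARY KEY,
           path TEXT NOT NULL,      -- where the video file lives on disc
           brightness REAL,         -- the analyst's measurements, one column each
           motion REAL
       )"""
)
conn.executemany(
    "INSERT INTO clips (path, brightness, motion) VALUES (?, ?, ?)",
    [
        ("clips/0001.avi", 0.82, 0.10),
        ("clips/0002.avi", 0.20, 0.75),
        ("clips/0003.avi", 0.78, 0.15),
    ],
)

def similar(brightness, motion, limit=2):
    """Paths of the clips whose measurements are closest (squared distance) to the given ones."""
    return conn.execute(
        """SELECT path FROM clips
           ORDER BY (brightness - ?) * (brightness - ?) + (motion - ?) * (motion - ?)
           LIMIT ?""",
        (brightness, brightness, motion, motion, limit),
    ).fetchall()

print(similar(0.8, 0.1))  # [('clips/0001.avi',), ('clips/0003.avi',)]
```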

Every so often, when the library is nearly full, the winnow sifts through it and discards the least wanted images. The library will hold about 40 hours of video, and the winnow might get rid of an hour's worth at a time.
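The winnow's rule might be sketched like this, assuming each clip carries a duration in seconds and a "wantedness" score from the other components (both hypothetical fields). Once the library passes a high-water mark near its 40-hour capacity, the least wanted clips are dropped until about an hour has been freed. The one-hour margin for "nearly full" is an assumed value.

```python
HOUR = 3600
CAPACITY = 40 * HOUR            # the library holds about 40 hours of video
HIGH_WATER = CAPACITY - HOUR    # assumed threshold for "nearly full"
BATCH = HOUR                    # discard roughly an hour's worth at a time

def winnow(clips):
    """clips: list of (name, seconds, score). Returns (kept, discarded)."""
    total = sum(seconds for _, seconds, _ in clips)
    if total < HIGH_WATER:
        return clips, []
    kept = sorted(clips, key=lambda c: c[2])  # least wanted first
    discarded, freed = [], 0
    while kept and freed < BATCH:
        clip = kept.pop(0)
        discarded.append(clip)
        freed += clip[1]
    return kept, discarded

# Forty one-hour clips scored 0..39: the library is over the high-water mark.
library = [(f"clip{i:02d}", HOUR, i) for i in range(40)]
kept, gone = winnow(library)
print(len(kept), [name for name, _, _ in gone])  # 39 ['clip00']
```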

The analyst looks closely at the stored and incoming video and prepares a vectorial analysis, which is handed to the librarian. The analyst uses a neural network and various image decomposition tools, and receives feedback from the id. Previously analysed video is periodically re-examined, because the robot is always changing its mind about how to see.
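As an illustration of what a vectorial analysis might contain, the sketch below reduces a short clip to four numbers: its average colour and its average frame-to-frame change (a crude motion measure). Frames are assumed to be lists of (r, g, b) pixels with values from 0 to 255; the real analyst would take many more measurements and, as described, use a neural network.

```python
def analyse(frames):
    """Return [mean_r, mean_g, mean_b, motion], each scaled to 0..1.

    frames: list of frames; each frame is a list of (r, g, b) tuples (0..255),
    and all frames have the same number of pixels.
    """
    pixels = [p for frame in frames for p in frame]
    means = [sum(p[i] for p in pixels) / (255 * len(pixels)) for i in range(3)]
    diffs = []
    for prev, cur in zip(frames, frames[1:]):
        # Sum of absolute channel changes between corresponding pixels.
        change = sum(abs(a - b) for pp, cp in zip(prev, cur) for a, b in zip(pp, cp))
        diffs.append(change / (255 * 3 * len(cur)))
    motion = sum(diffs) / len(diffs) if diffs else 0.0
    return means + [motion]

# Two tiny two-pixel frames: one pixel turns from red to blue.
frames = [[(255, 0, 0), (255, 0, 0)], [(255, 0, 0), (0, 0, 255)]]
print(analyse(frames))
```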

The mangler (or munger) copies chunks of interesting video and performs complex image processing tricks on them, such as rotations, colour correction and image analogies. The mangler uses neural networks and receives feedback from the ego and id, but its operations will be slightly random, so unwanted imagery may spring unbidden. It works slowly: some operations will take hours.

The id monitors the other components and tells them how they're doing according to inexplicable longings developed in its neural network. It receives feedback from the ego, but mostly just cares about itself. The id will require quite a bit of initial training.

The ego looks to see if anyone is watching and rewards the other components accordingly. It has a sub-circuit called the superego, whose role is mainly to get bored and complain if monotony occurs.
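One way the superego's boredom could work, assuming shots arrive as feature vectors like those the analyst produces: keep a short memory of recent shots and complain when they have all stayed close together. The window size and threshold are invented numbers, not design values.

```python
import math
from collections import deque

class Superego:
    """Gets bored when the last few shots barely move through feature space."""

    def __init__(self, window=4, threshold=0.1):
        self.recent = deque(maxlen=window)
        self.threshold = threshold

    def watch(self, vector):
        """Record a shot; return True (a complaint) if things have become monotonous."""
        self.recent.append(vector)
        if len(self.recent) < self.recent.maxlen:
            return False  # not enough history to judge yet
        centre = tuple(sum(xs) / len(xs) for xs in zip(*self.recent))
        spread = max(math.dist(v, centre) for v in self.recent)
        return spread < self.threshold

s = Superego()
print([s.watch((0.5, 0.5)) for _ in range(4)])  # [False, False, False, True]
print(s.watch((0.9, 0.1)))  # False: a contrasting shot relieves the boredom
```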

The attention detector is only of interest to the ego. It will consist of a camera and some motion detection/video analysis software, and will focus on the area immediately in front of the projector screen. When people stand there, it excites the ego. In this way, the robot receives ongoing, haphazard training throughout its life. The attention detector will often get things wrong (people might be facing the other way, for example), but that just adds to the fun.
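A minimal sketch of the detector's core using simple frame differencing, assuming greyscale frames stored as lists of rows of 0 to 255 values, and a rectangle marking the area in front of the screen. The region, pixel threshold and fraction are hypothetical; the plan is to use a ready-made package such as Gspy, which this does not attempt to reproduce.

```python
def someone_watching(prev, cur, region, pixel_threshold=30, fraction=0.05):
    """True if enough pixels changed inside region = (top, left, bottom, right).

    prev, cur: greyscale frames as lists of rows, values 0..255.
    """
    top, left, bottom, right = region
    changed = total = 0
    for y in range(top, bottom):
        for x in range(left, right):
            total += 1
            if abs(cur[y][x] - prev[y][x]) > pixel_threshold:
                changed += 1
    return total > 0 and changed / total >= fraction

empty = [[10] * 8 for _ in range(6)]
visitor = [row[:] for row in empty]
for y in range(3, 6):
    for x in range(2, 5):
        visitor[y][x] = 200  # a figure appears near the bottom of the frame
floor = (3, 0, 6, 8)  # hypothetical region: the lower half, in front of the screen
print(someone_watching(empty, visitor, floor))  # True
print(someone_watching(empty, empty, floor))    # False
```

As the text notes, this kind of detector cannot tell whether a visitor is actually facing the screen.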

The projector connects to a video projector. It is not really a thinking part of the robot, but provides a view into the internal processes.

The compiler examines the stored video, trying out different sequences and combinations of the best bits, based on the judgment of the other components. This experimentation is fed to the projector, and good combinations are marked and combined in the library. The compiler is looking for sequences perfect enough to be called finished films. These are created every three or four days and will typically be between two and seven minutes long. The form and composition of these films will be determined by the quality of the shape they make in vector space. You could say the compiler is a writer/editor, while the id is a simultaneously domineering and aloof director.
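The "quality of the shape" is deliberately left open, since the robot is meant to develop its own criteria. Purely as a hypothetical example, the sketch below scores a candidate film by how steady its path through vector space is (evenly sized steps score best) and rejects anything outside the two-to-seven-minute range. The steadiness criterion is an assumption for illustration only.

```python
import math

MIN_SECONDS, MAX_SECONDS = 2 * 60, 7 * 60  # finished films run two to seven minutes

def shape_score(shots):
    """shots: list of (seconds, vector). Higher is better; None if unusable.

    Hypothetical criterion: penalise jerky trajectories by the variance of
    the step sizes between consecutive shots' feature vectors.
    """
    duration = sum(seconds for seconds, _ in shots)
    # Need the right length, and at least two steps to judge steadiness.
    if not MIN_SECONDS <= duration <= MAX_SECONDS or len(shots) < 3:
        return None
    steps = [math.dist(a, b) for (_, a), (_, b) in zip(shots, shots[1:])]
    mean = sum(steps) / len(steps)
    variance = sum((s - mean) ** 2 for s in steps) / len(steps)
    return -variance

steady = [(60, (0.0, 0.0)), (60, (0.1, 0.0)), (60, (0.2, 0.0))]
jerky = [(60, (0.0, 0.0)), (60, (0.9, 0.0)), (60, (1.0, 0.0))]
print(shape_score(steady) > shape_score(jerky))  # True
print(shape_score(steady[:1]))  # None: 60 seconds is too short
```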

Each of these components will be constructed individually and thoroughly tested. Then they will be combined and the system tested as a whole. It's likely that the components will need tweaking for the system to work - they will be built to allow this.

The independent, self-contained robot is an anthropomorphic design error. Moving robots get flat batteries. Bodily integrity is of no particular value: pieces could be scattered worldwide and the robot would still work. A well-designed robot is static and shapeless. Nevertheless, and despite the symbolic problems with physical presence (considering the raison d'être), the robot will have a representative body in the form of one of its eyes mounted on a swivel, on a stand. In terms of the visitor experience, this is the robot.

To give the robot an interesting visual experience, the eyes must escape the room. Since sustained self-propulsion is impossible, the eyes will be parasitic upon the motion of others. People could be used as hosts, but even with the best intentions they would interfere with the film-making process. So the eyes will only use the motion of other machines. The ideal machines are probably buses, due to their regular routes, slowish pace, and excellent vantage point.

The eyes will need to be fitted with wireless networking devices. When the bus comes close to a wireless network node on Citylink's Cafenet ^1^ public access network, the eye will upload video across the network and into the robot. But because the bus will move on before the entire trip can be uploaded, the bus-clinging eyes will have filters built into them that perform winnowing on the fly, leaving only the good bits to be transferred. Thus the mobile eyes are a bit like the entire robot in miniature.
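The bus-mounted eye has to decide what to send during the short time it is near a Cafenet node. A minimal sketch, assuming the eye already has a score for each clip and a rough estimate of how many bytes it can send per stop (both hypothetical inputs): greedily pick the clips with the best score per byte until the upload budget is spent. Greedy selection is a simple heuristic, not an optimal solution to this knapsack-style problem, and clip sizes are assumed to be positive.

```python
def choose_uploads(clips, budget_bytes):
    """clips: list of (name, size_bytes, score). Returns names to send, best value first."""
    chosen, used = [], 0
    for name, size, score in sorted(clips, key=lambda c: c[2] / c[1], reverse=True):
        if used + size <= budget_bytes:  # skip anything that no longer fits
            chosen.append(name)
            used += size
    return chosen

clips = [
    ("lamp_post", 40_000_000, 2.0),
    ("rain", 10_000_000, 1.5),
    ("traffic", 60_000_000, 0.5),
]
print(choose_uploads(clips, 55_000_000))  # ['rain', 'lamp_post']
```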

Of course, eyes aren't strictly necessary. The robot could pick up video from TV or webcasts. That would no doubt be interesting, but is a different experiment. That said, the software I write will be available for others to use, so near-clones of this robot could be made that investigate media as a flat surface.

In the beginning, I will train the robot components individually with segments of video designed around their specific tasks, to get them to a point from which they can interact. Training the id will be the most involved and interesting task. I'll try to feed it interestingly textured urban landscapes such as Michael Brown's Super 8 films, and some of my recent 16 mm black and white films. Incidentally, there would be no copyright issues if I chose to use, say, The Lord of the Rings in training: the training material just leaves an imprint, no residue.

When the parts are brought together, they will train each other to some extent, and in settling into whatever equilibrium or disequilibrium is their fate, become a whole robot.

The robot will be based on existing software as much as possible, but large portions will need to be written from scratch. Not much work has been done in this area before.

The core will be Debian GNU/Linux^2^, a robust and capable operating system. Most robot components will be autonomous processes written in the Python^3^ and C languages, but some will run within the Gstreamer^4^ framework, as plugins written in C. Gstreamer is a framework for passing video around and applying treatments to it. The Video for Linux^5^ package will be used to interface with the cameras. Mysql^6^, a fast database system, will be used in the library. As already mentioned, image analogies^7^ will be used by the mangler, as will some effecTV^8^ effects. Other video processing might be done with the help of libraries such as MAlib^9^. The attention detector might use Gspy^10^, a ready-made motion detection package. All of this is, of course, subject to change.

All this software is available under the GNU General Public License^11^ or compatible terms, meaning, for the purposes of this robot, that it is free and its workings are available for inspection or modification. The robot's code will itself be released under the GPL, so that others may use it.

The robot will be made from standard computer parts, which will be bought as work progresses. It is too early to say what will be necessary (and available), but here are some guesses:

The main components (i.e., excluding the roving eyes) will not all fit on one processor. At the same time, they won't need a processor each (their separation is conceptual, not physical). I imagine they might be best spread out between two or three computers, with the mangler, ego and id sitting comfortably away from the busy action around the library.

The computers won't need keyboards, mice, floppy disks or CD drives.

They will need a lot of RAM (memory).

The library will need between four and eight hundred gigabytes of disc storage (two to four 200 GB discs). This would cost up to four thousand dollars now, but will be cheaper by the time the discs are needed.

The cameras will probably be mid-price USB "webcam" cameras, so no special firewire or analog inputs will be required. Likewise, the output to the projector will be standard VGA. It is possible to get USB cameras for around \$50 now, but they do not produce great video. I will be looking for cameras that can capture 640 x 480 pixel video with good colours.
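A quick check of how the storage figures and the camera resolution fit together. The frame rate (25 frames per second), 24-bit colour and decimal gigabytes are assumptions, not figures from this proposal.

```python
HOURS = 40                  # library capacity
WIDTH, HEIGHT = 640, 480    # target camera resolution
FPS = 25                    # assumed frame rate
BYTES_PER_PIXEL = 3         # assumed 24-bit colour

raw_bytes_per_second = WIDTH * HEIGHT * BYTES_PER_PIXEL * FPS
raw_gb_for_library = raw_bytes_per_second * HOURS * 3600 / 1e9

for disc_gb in (400, 800):
    # Average bit rate the stored video can use if 40 hours must fit in disc_gb.
    mbit_per_second = disc_gb * 1e9 * 8 / (HOURS * 3600) / 1e6
    ratio = raw_gb_for_library / disc_gb
    print(f"{disc_gb} GB: {mbit_per_second:.1f} Mbit/s, about {ratio:.0f}:1 compression")
# 400 GB: 22.2 Mbit/s, about 8:1 compression
# 800 GB: 44.4 Mbit/s, about 4:1 compression
```

Uncompressed, 40 hours at this resolution would need roughly 3.3 TB, so the video must be stored compressed; a ratio of 4:1 to 8:1 against raw RGB is modest by the standards of common video codecs.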

The roving eyes might be based on equipment borrowed from Citylink. They are willing to support "sufficiently silly projects that demonstrate something innovative" in the use of their networks.

The mechanical parts of the system will be made as simply as possible, out of whatever looks likely to work. The robot as object is not important - the moving image is the point.

I will begin work on the robot in the winter of 2003, and be finished by mid-2004.

| Item | Details | Cost (\$) |
|---|---|--:|
| Time | Hours upon hours, spread out over several months. To make the budget palatable I'm only asking for 26 weeks at \$500 per week. | 13,000 |
| Network consultancy and costs | Includes traffic costs for a wee website. | 2,000 |
| Materials | Computers, cameras, network equipment. | 4,000 |
| Expenses | Electricity, workshop space, research costs. | 500 |
| Total | | 19,500 |

1. Citylink: http://citylink.co.nz/ (the Citylink people are keen on the idea, and won't charge for local network traffic)

2. Debian: http://www.debian.org

3. Python: http://www.python.org

4. Gstreamer: http://www.gstreamer.net

5. Video for Linux: http://www.exploits.org/v4l/

6. Mysql: http://mysql.com

7. Image analogies: http://mrl.nyu.edu/projects/image-analogies/

8. EffecTV: http://effectv.sourceforge.net/

9. MAlib: http://www.malib.net/

10. Gspy: http://gspy.sourceforge.net/

11. GNU General Public License: http://www.gnu.org/copyleft/gpl.html